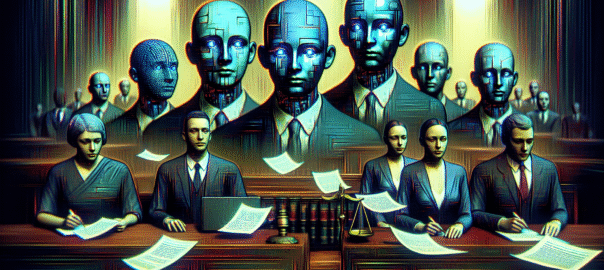

AI check: Decoding the legal labyrinth of autonomous agents’ accountability.

AI agents are becoming increasingly autonomous. As explored in our previous analysis of autonomous coding tools, these systems now raise critical legal and ethical questions about who is responsible and liable when something goes wrong.

As a tech enthusiast, I’m reminded of a project where miscommunication between two AI agents nearly derailed an entire software development workflow, a small-scale preview of the legal dilemma discussed here.

AI Check: Unraveling the Legal Maze of Autonomous Agents

The emergence of AI agents represents a paradigm shift in technological autonomy. According to Wired’s comprehensive report, companies like Microsoft and Google are aggressively developing multi-agent systems capable of performing complex tasks with minimal human oversight.

Tech market researcher Gartner estimates that agentic AI will resolve 80 percent of common customer service queries by 2029. Fiverr reports an 18,347 percent surge in searches for ‘ai agent’, a sign of strong market interest.

The legal landscape becomes particularly complex when agents from different companies interact. Joseph Fireman from OpenAI suggests that aggrieved parties typically target the companies with the deepest pockets, which means tech giants are likely to bear substantial responsibility for agent interactions.

Consider the case of Jay Prakash Thakur, whose prototype showed how a simple miscommunication between AI agents can lead to significant failures. In it, a summarization agent dropped a crucial qualification from the text it passed along, so any agent acting on that summary could behave in ways nobody intended.
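One way to catch this kind of failure is to check a summary against its source before handing it to the next agent. The sketch below is only an illustration and is not taken from Thakur’s prototype: the `QUALIFIERS` list, the function names, and the refund-policy sentence are all hypothetical, and a simple keyword check like this would miss qualifications phrased in other words.

```python
import re

# Hypothetical list of qualifier terms; a real deployment would need to tune this.
QUALIFIERS = ("only", "unless", "except", "not", "at least", "at most", "up to", "if")


def find_qualifiers(text: str) -> set[str]:
    """Return the qualifier terms that appear in text as whole words or phrases."""
    lowered = text.lower()
    return {q for q in QUALIFIERS if re.search(rf"\b{re.escape(q)}\b", lowered)}


def dropped_qualifiers(source: str, summary: str) -> set[str]:
    """Return qualifiers present in the source but absent from the summary."""
    return find_qualifiers(source) - find_qualifiers(summary)


def safe_handoff(source: str, summary: str) -> str:
    """Forward the summary only if no qualifier was lost; otherwise fall back to the source."""
    missing = dropped_qualifiers(source, summary)
    if missing:
        # Escalate rather than guess: pass on the original text with a warning label.
        return f"[UNVERIFIED SUMMARY, missing: {', '.join(sorted(missing))}] {source}"
    return summary


# Hypothetical example: the summary silently widens the policy.
source = "Refunds are allowed only for orders placed in the last 30 days."
summary = "Refunds are allowed for orders."
print(dropped_qualifiers(source, summary))
print(safe_handoff(source, summary))
```

A guard like this also leaves a record of which handoff lost the qualification, which matters once the question becomes who is liable for the result.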

The insurance industry has already begun responding, rolling out coverage for AI chatbot issues to help companies mitigate potential financial risks associated with autonomous agent errors.

AI Check: Autonomous Agent Liability Insurance Platform

Imagine a business that provides insurance and risk management solutions designed specifically for AI agent ecosystems. This platform would offer coverage that goes beyond traditional technology insurance, using predictive algorithms to assess and mitigate the risks associated with autonomous agent interactions.

The service would provide tailored risk assessments, real-time monitoring of agent interactions, and instant financial protection mechanisms. By leveraging machine learning and detailed interaction logs, the platform could help companies navigate the complex legal landscape of AI agent deployment.

Revenue would be generated through tiered subscription models, with pricing based on the complexity and potential risk of a company’s AI agent network. Additional revenue streams would include risk consulting, legal compliance support, and advanced predictive risk modeling services.

Navigating the Autonomous Frontier

As we stand on the brink of this AI revolution, we must ask ourselves: Are we ready to embrace the potential of autonomous agents while responsibly managing their inherent risks? Share your thoughts and experiences in the comments below, and let’s collaborate in shaping this technological landscape!

AI Check FAQ

- Q: Who is legally responsible when AI agents make mistakes?

A: Currently, the companies with the deepest pockets are the most likely targets of legal claims, though legal frameworks are still evolving.

- Q: How prevalent are AI agents?

A: Gartner estimates that agentic AI will resolve 80 percent of common customer service queries by 2029.

- Q: What are the main challenges with AI agent interactions?

A: Communication errors, misinterpretation of context, and potential system failures are key concerns.